In a new study published in PNAS, we investigated how people form and revise their perception of a risk, and how their evaluation of a danger is influenced by the judgments of their peers.

For risk experts, the assessment of danger results from a very pragmatic calculation: Simply multiply the probability that an accident will happen by the damage that this accident would cause, and you obtain a number that describes how serious a risk is. In this view, plane crashes or nuclear explosions are low risks because their probability is so low that it compensates for the potentially high number of losses when they do happen, whereas car accidents, smoking, or overexposure to the sun are high risks because they are very frequent, even though each occurrence affects only a few people.
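The experts' rule of thumb (risk = probability × damage) can be sketched in a few lines of Python. The hazard names and figures below are purely hypothetical, chosen only to show how a rare catastrophe can score lower than a frequent minor harm; they are not data from the study.

```python
from dataclasses import dataclass


@dataclass
class Hazard:
    name: str
    probability: float  # hypothetical chance of an accident per year
    damage: float       # hypothetical number of people harmed per accident

    def risk(self) -> float:
        # Expert-style risk score: probability multiplied by damage
        return self.probability * self.damage


# Illustrative, made-up figures (not taken from the study)
hazards = [
    Hazard("rare catastrophe", probability=1e-6, damage=10_000),
    Hazard("frequent minor accident", probability=0.05, damage=2),
]

# Rank hazards from highest to lowest expert-style risk score
for h in sorted(hazards, key=lambda h: h.risk(), reverse=True):
    print(f"{h.name}: {h.risk():.3f}")
```

With these made-up numbers, the frequent minor accident scores 0.100 and the rare catastrophe 0.010, mirroring the car-accident versus plane-crash contrast above.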

Yet public perception of risk is often at odds with experts’ assessments, because people do not calculate such probabilities. Instead, they form risk judgments in a much more intuitive and emotional way, on the basis of various features of the risk.

In this study, we wanted to know to what extent people’s perception of danger is influenced by the judgment of their friends and relatives, and how this process of social influence plays out in large populations of interacting people.

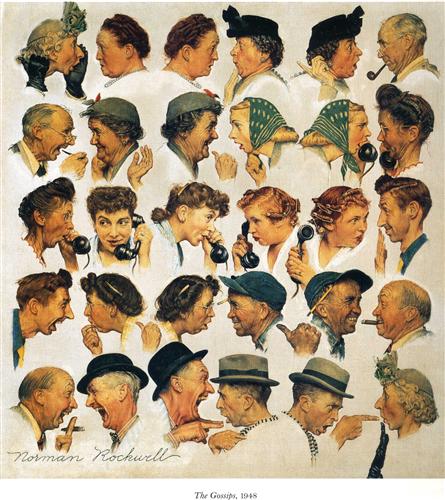

For this, we used a simple experimental paradigm pioneered by Frederic Bartlett more than 80 years ago: The telephone game. The experiment is straightforward: The first participant receives a message and is instructed to communicate it to a second individual, who in turn passes this information on to a third person, and so on until the end of the chain (10 subjects in our experiment), as illustrated by this famous painting by Norman Rockwell.

The interesting twist in our experiment is that the communicated message was not just a neutral story, but a comprehensive set of information about the benefits and harms of Triclosan, a controversial and widely used antibacterial agent. As a result, participants intuitively combined this message with their own subjective judgment of the risk before passing it on to the next subject. In this way, we were able to mimic, directly in the laboratory, how a rumor spreads in a social network.

Unsurprisingly, we found that the initial message was seriously distorted as it spread down the chain. We ran 15 chains in parallel, and the message that reached the last participant differed almost completely between chains, sometimes focusing on environmental damage, on breast-feeding, or on Greenpeace protests. At the end of the chain, the message was also much shorter than the initial input and contained a lot of incorrect information.

The more important finding is that the risk signal of the message was also distorted: The degree of risk that the message conveyed became gradually more extreme over repeated social transmissions. As it spread down the chain, the message became either more alarmist or more reassuring, with the direction of the change determined by the initial judgment of the subjects. Specifically, people who believe that the risk is low tend to tone down the dangers when they pass the message on (e.g., they may forget to mention some critical side effects), while those who are initially worried tend to amplify the risk (e.g., they may forget to mention some existing regulations).

In other words, people act as filters during risk communication, ‘coloring’ the message according to what they initially thought about the risk. Instead of changing people’s minds, the message mutates to fit their judgment.

Given this social process, the worst-case scenario occurs when a risk message spreads within a community of like-minded people, which is arguably also the most common situation. In that case, any risk message will rapidly mutate to fit the opinion of the community: Pieces of information that contradict the community’s view disappear, while those that support its judgment are highlighted.

Ultimately, the message can have a counter-intuitive polarizing effect on the population: After a few rounds of distortion, the message can strengthen the existing bias of the group, even though it initially supported the opposite view.
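To make the like-minded scenario concrete, here is a deliberately simplified toy model in Python. It is not the model or analysis used in the PNAS study: It assumes a hypothetical 0 (harmless) to 1 (very dangerous) risk scale and a hypothetical "coloring" weight by which each participant nudges the message toward their own prior judgment.

```python
def transmit_chain(initial_risk: float, priors: list[float], weight: float = 0.3) -> list[float]:
    """Toy transmission chain: each person nudges the message toward their own view.

    Risk levels use a hypothetical 0 (harmless) to 1 (very dangerous) scale.
    `weight` is a hypothetical 'coloring' strength, not a parameter from the study.
    Returns the message's risk level before the first teller and after each retelling.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    levels = [initial_risk]
    message = initial_risk
    for prior in priors:
        # Each retelling moves the message a fraction `weight` of the way toward the teller's prior
        message += weight * (prior - message)
        levels.append(message)
    return levels


# A chain of 10 like-minded, worried participants (prior 0.8) receives a reassuring message (0.2)
levels = transmit_chain(initial_risk=0.2, priors=[0.8] * 10)
print(" -> ".join(f"{x:.2f}" for x in levels))
```

In this sketch the reassuring message ends the chain at roughly 0.78, close to the group's worried view. It ignores most of what happens in real chains and is only meant to illustrate how a message can end up supporting the opposite of what it initially said.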

This study demonstrates that people’s risk perception is – at least in part – the outcome of a social process.

More information is available in the original (open-access) publication and its supporting information.